Argument mapping with LLMs

Two recent studies looked at the role that large language models (LLMs) could play to support argumentation. Both give an interesting glimpse into how the technology might be put to use to support critical thinking.

LLM-assisted argument mapping

In the first study [1], students followed a course in which they prepared for a debate. Some of them were asked to use argument mapping for this preparation, a well-known technique that helps students gain insight into the structure of arguments. There are good tools to support this kind of learning, such as Rationale and Kialo, and practicing with such tools is known to boost reasoning and critical thinking [2].

The argument mappers worked in teams, asynchronously, in a digital environment, which allowed the researchers to track their argumentative moves. Another group also worked online and asynchronously, but wrote a linear text to structure their debate argument. A final twist was that the argument mappers could use ChatGPT during the process, while the linear writers could not.

This design choice is a bit of a shame, because it's impossible to isolate the influence of LLMs from that of the argument mapping practice, and we already know argument mapping is effective. However, the study still yielded some valuable insights.

First of all, because the discourse between students was coded for both argumentative moves and displays of critical thinking skills, the researchers could link the two. For critical thinking skills, they used Murphy's framework, which was developed to analyse thinking skills in online, asynchronous discussions [3]. It breaks critical thinking down into recognising a problem, understanding evidence and perspectives, analysing information, evaluating information, and creating new knowledge or perspectives.

What they found was that for the linear writing teams, the distribution of critical thinking skills did not really vary with the complexity of argumentative moves and stayed at a relatively low level. The LLM-assisted argument mappers, however, showed more higher-order critical thinking skills as the complexity of their argumentative moves increased. To make this a bit more concrete: once the argumentative moves involved, for example, rebutting feedback given on a supportive response to an initial argument, the students also showed more evaluative and creative thinking, as defined in Murphy's framework.

Secondly, besides appreciating the argument maps as a visualisation tool, the students were happy to use ChatGPT, as it made them appreciate the process of argumentation and gave them examples and ideas to work with. They also stressed that ChatGPT was not always accurate or reliable, but as the students had been trained in prompting and had received lectures about the debate topics, they were well-positioned to remain vigilant.

In other words, this study suggests that there are didactic models in which LLMs enable students to engage in critical thinking. While the argument maps may have done the heavy lifting in terms of boosting argument quality, the LLM possibly kept the students engaged in what can otherwise be a stagnating activity.

ArgLLMs for explainable AI

The big problem with LLMs remains that we use them for knowledge work, but since they weave together linguistic strands, using symbols without grounding them, they are not reliable when they simulate reasoning. Because of this, we want them to "show their work".

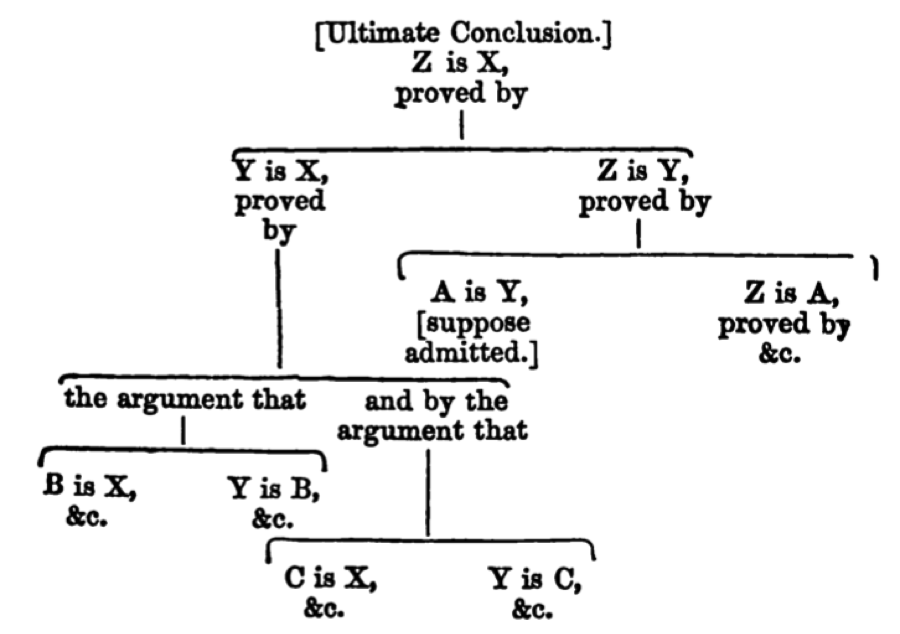

ArgLLMs are a proposal for LLMs that do exactly this, in the same way you would train a student to be more explicit about reasoning: by building an argument map [4]. ArgLLMs are an attempt to make LLM output both explainable and contestable, visualising the argumentation and enabling external agents to challenge it.

What ArgLLMs do is provide arguments in favour and against a particular claim. They also quantify the strengths of supportive and opposing arguments, so that they can assess the strength of the claim overall, based on the evidence under consideration. To check whether they are actually capable of this, researchers constructed four variations of an ArgLLM and tested their performance using claim verification datasets, such as StrategyQA. The ArgLLMs, which are built on top of existing LLMs, were prompted to build an argument and to estimate strengths of connections between constituent claims. Then, from these, a conclusion was drawn and the whole argumentation was visualised as an argument map.
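To make the idea of quantified strengths more tangible, here is a minimal sketch of how supporting and attacking arguments could be combined into an overall strength for a claim. The text above does not say which aggregation rule the researchers used; this sketch assumes a DF-QuAD-style rule from the argumentation literature. The class, the function names, the 0.5 threshold and all scores are made up for illustration.

```python
from dataclasses import dataclass, field
from math import prod


@dataclass
class Argument:
    """A node in a hypothetical argument map."""
    text: str
    base_score: float  # intrinsic strength in [0, 1], e.g. estimated by an LLM
    supporters: list["Argument"] = field(default_factory=list)
    attackers: list["Argument"] = field(default_factory=list)


def aggregate(strengths: list[float]) -> float:
    """Probabilistic sum: 0 when there are no arguments, closer to 1 as strong ones add up."""
    return 1 - prod(1 - s for s in strengths)


def strength(arg: Argument) -> float:
    """DF-QuAD-style final strength (assumed rule; the map must be acyclic)."""
    attack = aggregate([strength(a) for a in arg.attackers])
    support = aggregate([strength(s) for s in arg.supporters])
    if attack >= support:
        # Net attack pulls the strength from the base score towards 0.
        return arg.base_score - arg.base_score * (attack - support)
    # Net support pushes the strength from the base score towards 1.
    return arg.base_score + (1 - arg.base_score) * (support - attack)


def verdict(claim: Argument, threshold: float = 0.5) -> bool:
    """Accept the claim if its final strength exceeds the (assumed) threshold."""
    return strength(claim) > threshold


# Illustrative scores only: one supporting and one attacking argument.
attack = Argument("hypothetical counter-argument", base_score=0.9)
support = Argument("hypothetical supporting argument", base_score=0.6)
claim = Argument("hypothetical claim", base_score=0.5,
                 supporters=[support], attackers=[attack])

before = strength(claim)
print(f"{before:.2f}", verdict(claim))  # 0.35 False

# A human contests the counter-argument by lowering its score.
attack.base_score = 0.2
after = strength(claim)
print(f"{after:.2f}", verdict(claim))   # 0.70 True
```

Note that in this sketch a contested argument stays in the map with a lower weight rather than being removed, so a weak attack still pulls the claim down a little.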

When the claim verification performance of the four variants was compared against chain-of-thought prompting, the ArgLLMs were deemed to perform comparably, so no accuracy was lost (or gained) relative to regular methods. But in contrast to existing approaches, the responses were easier to verify and adapt. See for example the following reasoning, which initially attacked the claim but supported it after being contested via human intervention:

The red text is typical LLM nonsense, but initially the argument was counted as a strong attack on the claim. After it was contested, the argument was quantified as weaker, thereby changing the system output.

As this example shows, even ArgLLMs do not "reason" in any meaningful way. We would not accept this in a student's argument map: why is the argument still there, albeit in weakened form? But the breakdown offered by the ArgLLM does make this weakness more salient, and allows human decision-makers to think critically about machine output. One can imagine the ArgLLM being even more useful if the argument map is constrained to particular resources, such as specific videos or articles.

Argumentation training with LLMs

Taken together, these two studies suggest a future in which LLM use and argument mapping are integrated practices. As students scaffold their reasoning, LLMs can provide valuable input and maintain an argument map, which can be contested and revised by the students. Since this process is engaging and does draw on higher-order skills, it is good practice for critical thinking, especially if the team approach is maintained so that the critical thinking remains a social activity.

References

[1] Chen, X., Jia, B., Peng, X., Zhao, H., Yao, J., Wang, Z., & Zhu, S. (2025). Effects of ChatGPT and argument map (AM)-supported online argumentation on college students' critical thinking skills and perceptions. Education and Information Technologies, 1-36.

[2] Van Gelder, T., Bissett, M., & Cumming, G. (2004). Cultivating expertise in informal reasoning. Canadian Journal of Experimental Psychology/Revue canadienne de psychologie expérimentale, 58(2), 142.

[3] Murphy, E. (2004). An instrument to support thinking critically about critical thinking in online asynchronous discussions. Australasian Journal of Educational Technology, 20(3).

[4] Freedman, G., Dejl, A., Gorur, D., Yin, X., Rago, A., & Toni, F. (2024). Argumentative large language models for explainable and contestable decision-making. arXiv preprint arXiv:2405.02079.
