Not as human: why I fear the effect of LLMs

There is something very weird about large language models that goes beyond their uncanny performance. While I see many people being spooked by what seems to be a ghost in the machine, it is rather their lack of agency that disconcerts me. They generate utterances and we call those language, but it is different from any language that came before.

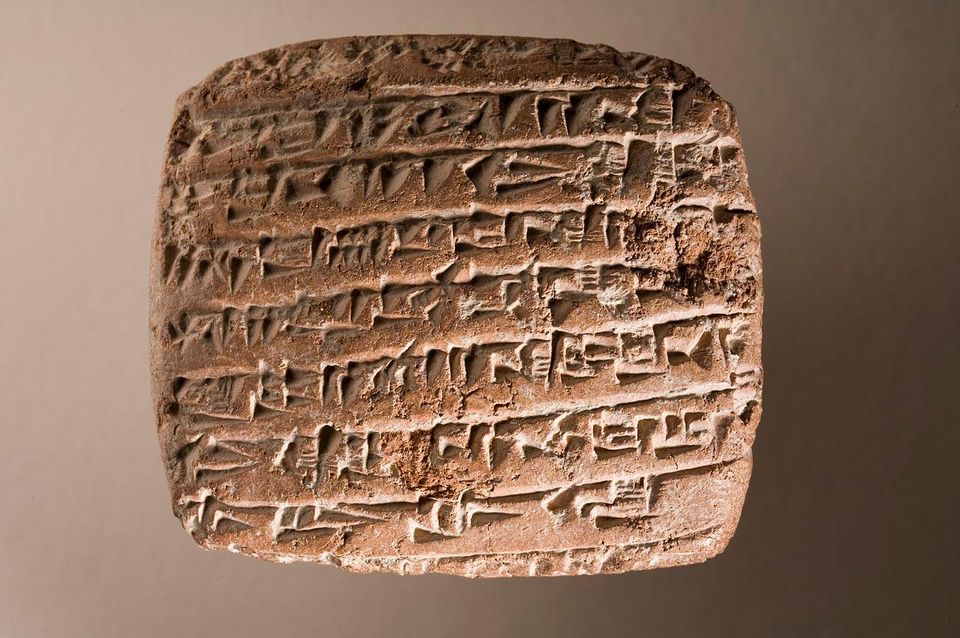

Writing, the original langtech, was already violence against language. It decontextualized words and robbed readers of the chance to respond, to voice or embody their emotions or thoughts. But writing at least respected one core idea about language – that there was someone speaking. In large language models, the words of millions are used for the voice of no one.

I am not a linguist and so perhaps my thoughts on language are naïve. They have been shaped by raising a child, who had to communicate through cries and shouts with parents who, high on oxytocin or whatever it is, were especially attuned to each minor nuance in the shape of the screaming. We learnt which shouts meant poop and which meant food and in both cases we learnt to estimate how much.

We reciprocated the screams with simple utterances that carried slightly more detail than the screams did. Language became a dance – phonetically, syntactically and semantically – escalating towards more structure, more resolution and more nuance. And while we may have been leading the dance, the real driver was our child's urge to get a more fine-grained grip on those around her.

Because that is what language offers. Our arms and legs may allow us to move objects, but our language allows us to move minds. It is an appendage into the social world. It is through our words that we demand, request, question, convince and persuade others. This is not a unidirectional thing – all of us are also sensitive recipients of language and cannot help inferring mental states and intentions from utterances. This dynamic is predicated on the notion that language exists for humans to move humans.

Yet this notion will not remain. Large language models will be embedded everywhere. They will summarize meeting notes, rephrase messages, structure text that is not neatly structured, turn documents into presentation slides and the other way around, recombine information to suggest ideas and much, much more. A handy tool, you might say (and you would be right), but using it also means placing your expressiveness in the hands of a machine that of itself has nothing to express.

Moreover, this machine is owned by a company that now stands between you and your ability to cause movement in the social world.

I've seen enthusiasts compare the introduction of large language models to the invention of fire. The idea behind this, I suppose, is that this technology is so pivotal that it will herald a new civilization. Now all inventions will of course share features with the invention of fire, but fire gave us resilience against the forces of nature – whether these were cold, darkness or digestive challenges – and LLMs will do no such thing. If they protect us from anything, it will be from putting too much of ourselves into linguistic utterances, so perhaps they are most comparable to earlier attempts to separate the personal from social exchange – like the invention of bureaucracy and professionalism.

Yet not putting our real selves into linguistic utterances is only a net good if we can contain this habit. I do not enjoy summarizing meeting notes and answering the same questions over e-mail 20 times per month, so anything that helps me avoid that is fantastic. But I also recall that, when SwiftKey first introduced me to the wonders of predictive texting, I would sometimes just go with the predictions, even if I had intended to say something slightly different. The prediction was close enough, the lack of friction was pleasurable and when we express ourselves, we are often making it up as we go, anyway. So why not just say what the machine thought I wanted to say?

Of course, there will still be conversations with friends, with family and with strangers on the street, unmediated by technology and close to language in its original form. Yet as anyone who ever caught himself using corporate language at home can attest, it is not easy to escape workplace vernacular. So if our goal-oriented professional selves are bombarded with machine-mediated language, who is to say that we will not absorb its smug, matter-of-fact tone, its dispassionate balance and its unimaginative structure?

There are some who worry that machine cognition will bring a superintelligence to this world that will eradicate humanity. Even if you don't, there are harms involved in widely deploying LLMs, such as reproducing bias or cementing economic inequalities. But the more I think about large language models, the more my concern is that even if humanity survives this, it will not be as human anymore.